The AI Safety Institute, established to promote the ethical and secure development of artificial intelligence, has played a central role in advancing responsible AI practices. Its mission is to safeguard the deployment of AI technologies by conducting rigorous research, establishing safety protocols, and advocating for robust regulatory frameworks. Since its inception, the institute has helped bridge the gap between technological progress and ethical considerations, fostering a safer AI ecosystem.

Recognizing San Francisco’s pivotal role as a global tech hub, the AI Safety Institute has decided to expand into the city. San Francisco hosts a significant concentration of AI research and development, making it an ideal location for the institute to amplify its impact. By situating itself at the center of this activity, the institute aims to draw on the expertise and resources available locally, strengthening both its research capabilities and its outreach.

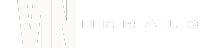

The expansion to San Francisco is not merely about geographical presence; it is a move to foster international collaboration and address global challenges in AI governance. The institute’s presence in such a vibrant tech environment underscores its commitment to working alongside industry leaders, academic institutions, and regulatory bodies. This collaborative approach aims to develop comprehensive safety standards and policies that can be adopted worldwide.

Moreover, this expansion comes at a critical moment, as regulatory shortcomings in AI face growing scrutiny worldwide. AI technologies have advanced faster than the regulatory frameworks meant to govern them, creating risks and ethical dilemmas that existing rules do not adequately address. By expanding its operations to San Francisco, the AI Safety Institute is positioned to help meet these challenges: advocating for stronger regulations and ensuring that AI technologies are developed and deployed responsibly.

Regulatory Shortcomings and the Role of the Expanded AI Safety Institute

Amid the rapid advancement of artificial intelligence technologies, regulatory frameworks have struggled to keep pace, exposing several critical shortcomings. One of the most pressing issues is data privacy. Current regulations often fail to adequately protect sensitive information, leaving individuals exposed when their data is misused. The ethical implications of AI, particularly around bias and fairness, also raise significant concerns. Existing frameworks frequently lack the sophistication needed to address these nuanced dilemmas, which can result in real societal harm.

Accountability is another area where regulations fall short. As AI systems become more autonomous, determining responsibility for their actions becomes increasingly complex. This gap in accountability mechanisms can lead to situations where harmful decisions made by AI systems go unaddressed. Furthermore, the sheer speed at which AI technologies are evolving outstrips the ability of regulatory bodies to respond effectively. This lag creates a regulatory vacuum, allowing potentially dangerous technologies to proliferate without adequate oversight.

The expansion of the AI Safety Institute to San Francisco aims to address these regulatory gaps through a multifaceted approach. By promoting rigorous research in AI ethics, the institute seeks to develop frameworks that can better navigate the ethical complexities of AI technologies. Robust safety protocols will be a cornerstone of the institute’s work, ensuring that AI systems are designed and deployed with safety as a paramount concern.

Collaboration with policymakers will be crucial. The expanded institute will work closely with regulatory bodies to shape regulations that are not only effective but also forward-thinking, anticipating future challenges rather than merely reacting to existing ones. Examples of past regulatory failures, such as the misuse of facial recognition technology and the Cambridge Analytica scandal, highlight the urgency of this mission. Looking ahead, potential risks such as autonomous weaponry and unchecked surveillance underscore the vital importance of proactive regulatory measures.

By addressing these regulatory shortcomings, the expanded AI Safety Institute aims to create a safer, more ethical landscape for AI technologies, ensuring they benefit society while minimizing potential harms.